Most technology changes that affect families do not arrive with fanfare. They show up quietly, tucked into system updates or policy adjustments, and only become noticeable once behaviour starts to change.

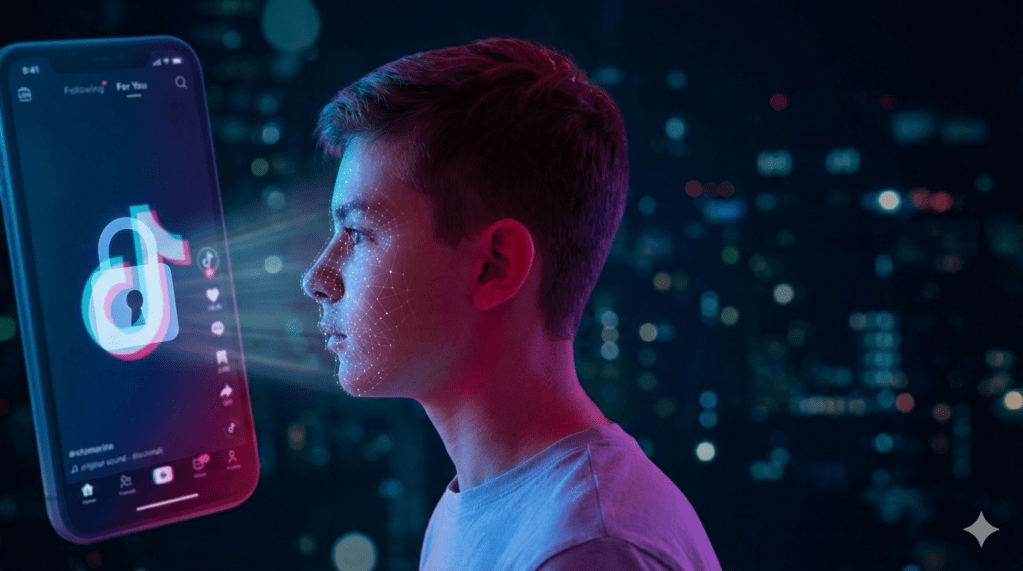

TikTok’s decision to roll out automated age detection tools across Europe falls squarely into that category.

Instead of relying only on users to type in a date of birth, TikTok will now use a mix of profile information, content signals and behaviour patterns to identify accounts that are likely being run by children under 13. Those accounts are then reviewed by a human team, rather than being automatically removed.

On the surface, this might sound like a technical compliance move aimed at regulators. In reality, it signals something more important. Platforms are being pushed to take responsibility for knowing who is actually using their services, rather than pretending that self-declared information is enough.

For parents, educators and anyone thinking about how technology fits into daily life, that shift is worth paying attention to.

Why the old approach never really worked

For years, online platforms have relied on a simple system. Ask users how old they are, trust the answer, and build safety rules on top of that assumption.

Anyone who has spent time around children knows how fragile that model is. Kids are curious. They want to explore the same digital spaces as older siblings or friends. Typing in an older birth year is rarely seen as lying. It is seen as unlocking access.

That means many of the safeguards designed to protect younger users were built on unreliable foundations. The rules existed, but enforcement depended on honesty from the very people the rules were meant to protect.

TikTok’s new approach accepts that reality. It does not assume bad intent, but it does assume that age declaration alone is not enough.

Automation is becoming part of everyday safety

This change also reflects a broader pattern across technology. Automation is no longer just about speed or efficiency. It is increasingly being used to make judgement calls that people used to make, or that were avoided altogether.

In this case, automation is being used to flag risk. The system does not make a final decision. It surfaces accounts that deserve closer attention.

That distinction matters. Fully automated decisions about identity or access can be dangerous. But automation used as an early warning system, backed by human review, can meaningfully improve outcomes.
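
For readers who want to see the shape of that idea, here is a minimal sketch of a "flag first, human decides" pipeline. It is not TikTok's system, whose internals are not public. Every name, weight and threshold below (`SIGNAL_WEIGHTS`, `REVIEW_THRESHOLD`, `risk_score`, `flag_for_review`, `apply_human_decision`) is a hypothetical chosen purely for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical weights for the three kinds of signal the article mentions.
SIGNAL_WEIGHTS = {
    "profile": 0.3,
    "content": 0.4,
    "behaviour": 0.3,
}

# Hypothetical cut-off: scores at or above this go to a human reviewer.
REVIEW_THRESHOLD = 0.6


@dataclass
class Account:
    account_id: str
    # Each signal is a score from 0.0 to 1.0; higher means "more likely under 13".
    signals: dict[str, float] = field(default_factory=dict)


def risk_score(account: Account) -> float:
    """Weighted sum of the signals; a missing signal counts as 0."""
    return sum(
        weight * account.signals.get(name, 0.0)
        for name, weight in SIGNAL_WEIGHTS.items()
    )


def flag_for_review(accounts: list[Account]) -> list[Account]:
    """Return the accounts that deserve a closer look. Nothing is removed here."""
    return [a for a in accounts if risk_score(a) >= REVIEW_THRESHOLD]


def apply_human_decision(account: Account, reviewer_confirms_under_13: bool) -> str:
    """The outcome depends on the reviewer's judgement, not on the score."""
    return "remove" if reviewer_confirms_under_13 else "keep"


accounts = [
    Account("a", {"profile": 0.9, "content": 0.8, "behaviour": 0.7}),
    Account("b", {"profile": 0.1}),
]
queue = flag_for_review(accounts)
print([a.account_id for a in queue])
```

The point of the design is that the automated step only builds a queue. A high score never removes an account by itself, and a reviewer can still decide to keep an account that the system flagged.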

For families, this means safety is no longer just about settings and parental controls. It is also about how platforms quietly monitor behaviour behind the scenes.

What this means for parents and educators

For parents, this rollout is not something that requires immediate action, but it does change the conversation.

It signals that platforms are beginning to accept responsibility for detecting risk, rather than placing the entire burden on families. That does not replace conversations about online behaviour or boundaries, but it does add a layer of protection that previously did not exist.

For educators, it highlights how digital literacy is evolving. Understanding how platforms make decisions is becoming just as important as understanding what content students see. Age, access and safety are no longer static settings. They are dynamic systems shaped by data and behaviour.

It also reinforces the importance of transparency. As these systems become more common, families and schools will need clearer explanations of how decisions are made and how mistakes are handled.

The regulatory pressure underneath it all

It is no coincidence that this rollout is happening in Europe. Regulators there have made it clear that platforms must demonstrate real enforcement of child safety rules, not just publish policies.

What is interesting is how that pressure is shaping technology itself. Instead of banning features or imposing blunt restrictions, regulation is pushing companies to redesign their systems.

That is a quieter form of influence, but often a more effective one. It changes how platforms operate at a structural level, not just how they present themselves publicly.

Imperfect, but meaningful

No age detection system will ever be perfect. Behaviour does not map cleanly to age. Creative adults can look young online. Teenagers can behave like adults. Mistakes will happen.

The important thing is not perfection. It is intent and accountability.

By combining automated signals with human review, TikTok is acknowledging both the limits of technology and its potential. It is a more realistic approach than pretending age can be verified through a single form field.

Why this is worth noticing now

This is not about TikTok alone. It is about a broader shift in how digital spaces are governed.

We are moving away from an era where platforms simply hosted content and blamed users for misuse. We are entering one where platforms are expected to actively identify risk, intervene earlier and explain their decisions.

Most people will never read a regulation or a policy document. They will feel these changes through everyday experiences. An account being flagged. Content access changing. A platform asking different questions.

That is why this matters.

Not because it is dramatic or controversial, but because it shows how technology is quietly reshaping responsibility. And when it comes to children, safety and everyday digital life, quiet changes often have the biggest impact.
